Abstract

Recognizing and comprehending human actions and gestures is a crucial perception requirement for robots that interact with humans and carry out tasks in diverse domains, including service robotics, healthcare, and manufacturing. Event cameras, which capture fast-moving objects at high temporal resolution, offer new opportunities compared with standard action recognition from RGB videos. However, previous research on event-camera action recognition has focused primarily on sensor-specific network architectures and image encodings, which may not transfer to new sensors and limit the use of recent advances in transformer-based architectures.

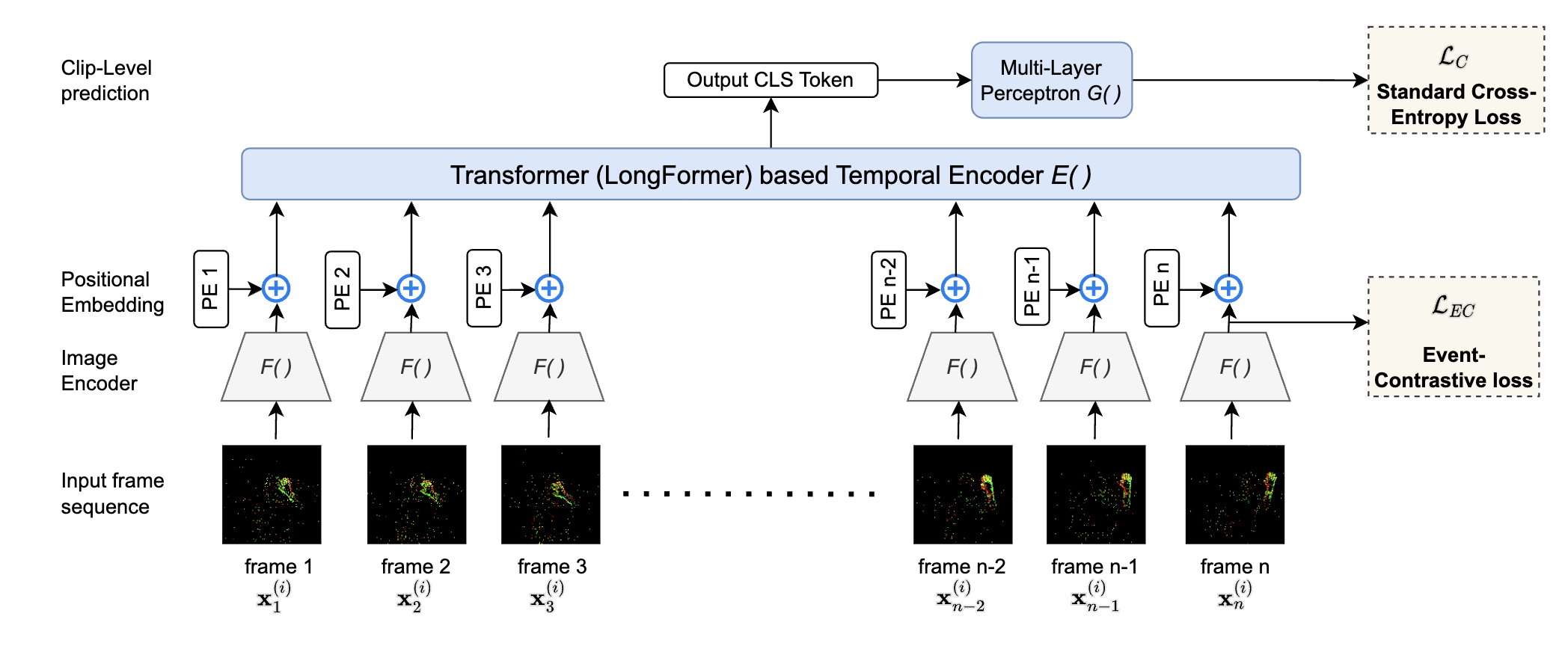

In this study, we employ a computationally efficient model, the Video Transformer Network (VTN), which first extracts a spatial embedding for each event-frame and then applies temporal self-attention across frames. Separating the spatial and temporal operations makes VTN more computationally efficient than other video transformers that process spatio-temporal volumes directly. To better adapt VTN to the sparse and fine-grained nature of event data, we design an Event-Contrastive Loss (L_EC) and event-specific augmentations. The proposed L_EC encourages the spatial backbone of VTN to learn fine-grained spatial cues by contrasting temporally misaligned frames.
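To make the efficiency argument concrete, the back-of-the-envelope sketch below counts the query-key pairs scored by a single self-attention layer in the two designs. The frame count (16) and the 196 patches per frame (a 14x14 grid) are hypothetical values chosen only for illustration, and the function names are illustrative, not from the original work. The count deliberately ignores the cost of the per-frame spatial encoder, which a VTN-style model still pays once per frame.

```python
def joint_attention_pairs(num_frames: int, patches_per_frame: int) -> int:
    """Query-key pairs scored by one self-attention layer over all spatio-temporal patch tokens."""
    tokens = num_frames * patches_per_frame
    return tokens * tokens


def temporal_attention_pairs(num_frames: int) -> int:
    """Query-key pairs scored by one temporal self-attention layer over per-frame embeddings plus a [CLS] token."""
    tokens = num_frames + 1
    return tokens * tokens


if __name__ == "__main__":
    T, N = 16, 196  # hypothetical: 16 event-frames, 14x14 patches per frame
    print(joint_attention_pairs(T, N))   # 9834496
    print(temporal_attention_pairs(T))   # 289
```

The quadratic attention term shrinks from (T·N)² to (T+1)², but the spatial encoder's per-frame cost has to be added back for a fair end-to-end comparison.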

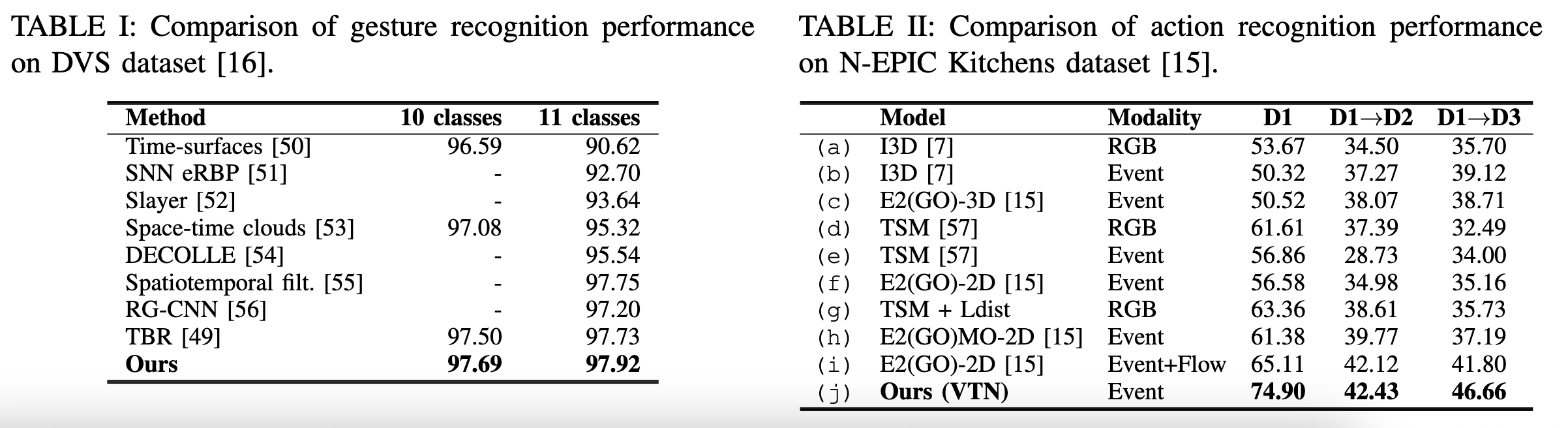

We evaluate our method on real-world action recognition using the N-EPIC Kitchens dataset and achieve state-of-the-art results under both protocols: testing in a seen kitchen (74.9% accuracy) and testing in unseen kitchens (42.43% and 46.66% accuracy). Our approach also requires less computation time than competitive prior approaches. We further evaluate our method on the standard DVS Gesture recognition dataset, where it achieves a competitive accuracy of 97.9% compared with prior work that relies on architectures and image encodings designed specifically for the DVS dataset. These results demonstrate the potential of our framework, EventTransAct, for real-world applications of event-camera-based action recognition.

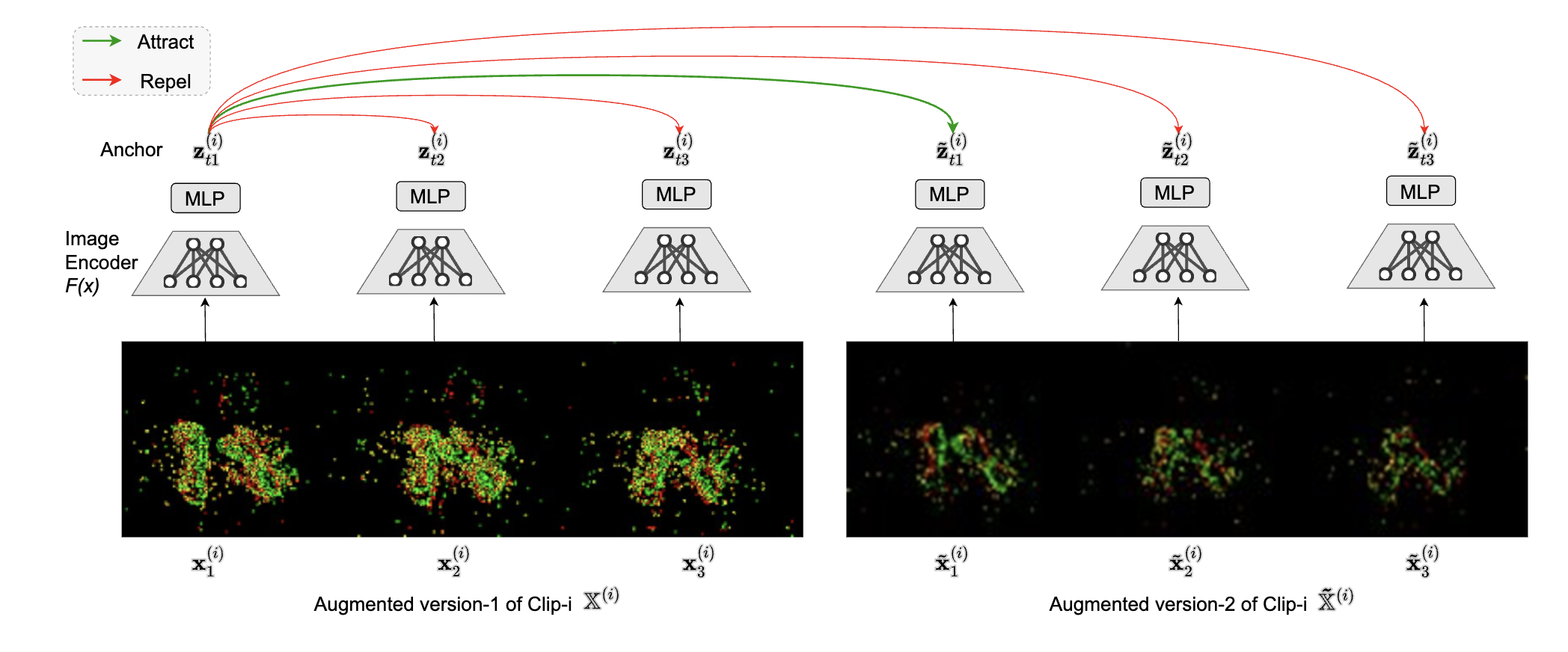

Event Contrastive Learning

Event Contrastive Learning increases the temporal distinctiveness of the spatial embedding F(x) by maximizing the agreement between two differently augmented versions of the same frame while maximizing the disagreement between temporally misaligned frames. For visualization purposes, only 3 frames per clip are shown.
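One way to express "agree with the other augmentation of the same frame, disagree with temporally misaligned frames" is an InfoNCE-style objective over the frames of a clip. The sketch below is an assumption, not the exact loss from this work: it uses cosine similarity, a temperature of 0.1, and a one-directional cross-view formulation in which frame i of view A must pick out frame i of view B among all T frames of view B. The function and parameter names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / max(norm, 1e-12)  # guard against zero-length vectors


def event_contrastive_loss(view_a: List[Vector], view_b: List[Vector],
                           temperature: float = 0.1) -> float:
    """InfoNCE-style loss over the T frames of one clip (assumed formulation).

    view_a[i], view_b[i]: spatial embeddings F(x) of two augmentations of frame i.
    Positive pair: (view_a[i], view_b[i]).
    Negatives: view_b[j] for j != i, i.e. temporally misaligned frames.
    """
    assert len(view_a) == len(view_b) > 1
    total = 0.0
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        # log-sum-exp with max subtraction for numerical stability
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]  # -log softmax probability of the aligned frame
    return total / len(view_a)


if __name__ == "__main__":
    frames = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(event_contrastive_loss(frames, frames))                           # near 0
    print(event_contrastive_loss(frames, [frames[1], frames[2], frames[0]]))  # large
```

Because every negative comes from the same clip, the only way to lower the loss is to make each frame's embedding distinguishable from its temporal neighbours, which is the behaviour the caption describes.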

Method

We employ the Video Transformer Network (VTN) architecture [17], which extends an image encoder F with a transformer E that takes frame embeddings as input tokens. Each event-frame x(i) is passed through the spatial encoder F(·) to extract spatial features. These features, augmented with positional embeddings (PE) and a [CLS] token, are then processed by a transformer-based LongFormer module E(·) to learn global temporal dependencies. The output at the [CLS] token is classified by the head G(·). The model is trained with both a cross-entropy loss and the proposed event contrastive loss.
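The toy, pure-Python sketch below traces the forward data flow F → (PE + [CLS]) → E → G. Much of it is an assumption for illustration only. F is a single linear projection of a flattened event-frame rather than a real image backbone. E is one full single-head self-attention layer with a residual connection, standing in for the LongFormer module. The weights are random and untrained, and the class and parameter names (TinyVTN, frame_pixels, max_frames) are hypothetical. Training with cross-entropy and the contrastive loss is not shown.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class TinyVTN:
    """Toy VTN-style pipeline: per-frame encoder F, temporal attention E, head G (hypothetical)."""

    def __init__(self, frame_pixels: int, dim: int, max_frames: int,
                 num_classes: int, seed: int = 0):
        rng = random.Random(seed)
        self.dim, self.max_frames = dim, max_frames
        self.w_spatial = random_matrix(frame_pixels, dim, rng)   # stand-in for F(.)
        self.pos_emb = random_matrix(max_frames + 1, dim, rng)   # PE for [CLS] + frames
        self.cls_token = random_matrix(1, dim, rng)              # learnable [CLS] in a real model
        self.w_q = random_matrix(dim, dim, rng)
        self.w_k = random_matrix(dim, dim, rng)
        self.w_v = random_matrix(dim, dim, rng)
        self.w_head = random_matrix(dim, num_classes, rng)       # stand-in for G(.)

    def spatial_encoder(self, frames: Matrix) -> Matrix:
        # F(x(i)): each flattened event-frame is projected independently (no temporal mixing)
        return matmul(frames, self.w_spatial)

    def temporal_attention(self, tokens: Matrix) -> Matrix:
        # E(.): single-head full self-attention across time, plus a residual connection
        q, k, v = (matmul(tokens, w) for w in (self.w_q, self.w_k, self.w_v))
        scale = 1.0 / math.sqrt(self.dim)
        out = []
        for qi in q:
            weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
            out.append([sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(self.dim)])
        return [[t + o for t, o in zip(tok, att)] for tok, att in zip(tokens, out)]

    def forward(self, frames: Matrix) -> List[float]:
        assert len(frames) <= self.max_frames
        tokens = self.cls_token + self.spatial_encoder(frames)  # prepend [CLS]
        tokens = [[t + p for t, p in zip(tok, pe)] for tok, pe in zip(tokens, self.pos_emb)]
        cls_out = self.temporal_attention(tokens)[0]            # output at the [CLS] position
        return matmul([cls_out], self.w_head)[0]                # class logits from G


if __name__ == "__main__":
    rng = random.Random(1)
    clip = [[rng.random() for _ in range(8 * 8)] for _ in range(8)]  # 8 flattened 8x8 event-frames
    model = TinyVTN(frame_pixels=64, dim=16, max_frames=8, num_classes=4)
    print(model.forward(clip))
```

The split mirrors the design choice described above: F never sees more than one frame, so all temporal reasoning happens in E over T+1 tokens.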

Results

Our experimental results demonstrate the effectiveness of the proposed method for both gesture recognition and real-world action recognition. In particular, the state-of-the-art performance on the N-EPIC Kitchens dataset when the model is evaluated in unseen environments highlights its ability to generalize to novel settings, making it a more reliable choice for deployment.